
Computer

RESURRECTION

The Journal of the Computer Conservation Society

ISSN 0958-7403

Number 90

Summer 2020

Contents

| Society Activity | |

| News Round-up | |

| Packet Switching and the NPL Network | Peter Wilkinson |

| Thoughts on the ICL Basic Language Machine | Virgilio Pasquali |

| Obituary: Margaret Sale | Stephen Fleming |

| Why is there no well-known Swiss IT Industry? | Herbert Bruderer |

| Ho Hum, Nothing to Do | Michael Clarke |

| 50 Years Ago .... From the Pages of Computer Weekly | Brian Aldous |

| Forthcoming Events | |

| Committee of the Society | |

| Aims and Objectives |

Society Activity


IBM Museum — Peter Short

Current Activities

The first couple of months this year were relatively quiet, with three curators taking vacation, two of whom got stranded in Australia and New Zealand during March. Hursley closed for the duration at the start of March, with employees working from home, so we’ve not been back there since. Activities have been limited to website updates and thoughts for the future.

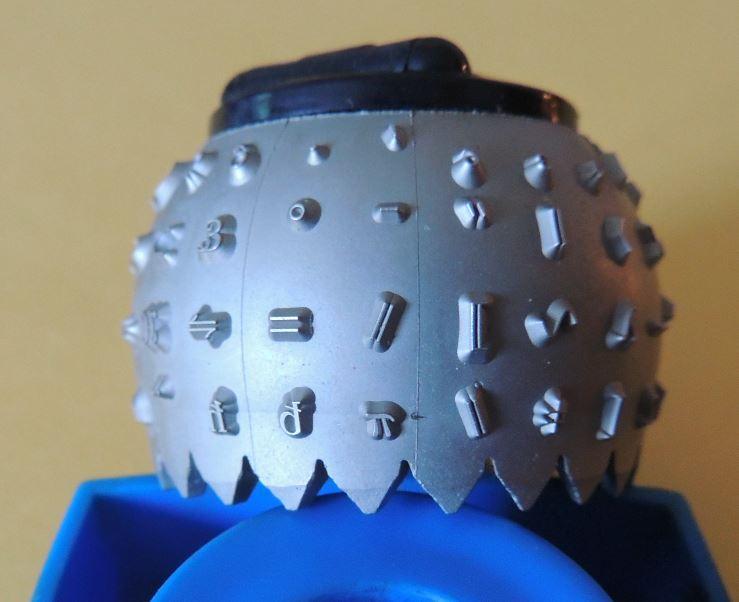

A new page has been added to the website, which tries to explain the typical processes and hardware involved in data processing using punched cards (slx-online.biz/hursley/pcprocess.asp). It also highlights some of the hardware that in part contributed to the demise of the IBM Card. It includes a photo of a warehouse facility containing an estimated 4GB of punch card storage. Each punch card offered a maximum of 80 columns, or bytes. One card box typically contained 2,000 cards for a maximum of 160K bytes, 6¼ boxes per megabyte, or 625 boxes per 100MB. Find your little processing job amongst that lot!

We had an unusual enquiry from a lady in Ireland, whose father used to run a typewriter rental business. Her parents gave her the middle name “Pifont” after a Selectric typewriter golf ball that contained the π symbol, and she in turn has given her daughter that middle name. She wanted to show her daughter a picture of the golf ball and wondered if we could help. After digging through our collection I managed to find one such type-head and was able to send her a photo and a print-out of the complete Symbol font set.

The follow-on plan was to see if we had a duplicate type head which we could send, but the dreaded virus thwarted that plan. Meanwhile we found a 3D printer file which I was able to update with a Greek font. My son ran this through his 3D printer, but as expected the quality was nowhere near good enough. We do have access to a resin printer in Hursley, so some day we should be able to produce this in a much higher quality and surprise Ms. Pifont.

Other

Last time I reported on the offer of a working System/3. The owner subsequently informed us that he’s not yet ready to part with it, and we may have to wait for illness or his demise. At least that gives us time to try and find £5K for shipping charges. We live in hope! We’ve also added a link from the museum website to IBM’s interactive “History of Progress” at www.ibm.com/ibm/history/interactive/index.html. This runs in your browser, windowed or full screen, using Adobe Flash Player, and gives a really interesting account of IBM’s history from pre-IBM 1890s through to the early 2000s. There’s also a desktop version and a PDF of the story to download.


Analytical Engine — Doron Swade

The Babbage technical archive held by the Science Museum has been reviewed sheet by sheet so the reference sources of AE-related content are now known. After a short hiatus Tim Robinson is carrying out the same exercise on the Babbage material in the Buxton papers held in Oxford. This material is of particular interest, not least because there are several essays Babbage wrote on the Analytical Engine while in Italy immediately after his visit to Turin in 1840 where he gave his first and only seminar-lecture on the Analytical Engine at a convention of mathematicians, surveyors and scientists. This rare engagement with others was a significant stimulus to Babbage so his writings immediately following this are of special interest. We have done two substantial photo shoots of this manuscript material, so digitised images are to hand. Part of the difficulty is that the manuscripts are unsympathetically bound (text lost in the binding gutters), some material is undated, and the manuscripts are not bound in chronological order. We are also currently planning the best way to document the findings so far, for wider dissemination – this is a lesson learned from the material left by the late Allan Bromley who regrettably published only a small part of his deep understanding of the AE design.


EDSAC — Andrew Herbert

Separately the nickel delay line store and initial orders have been commissioned as sub-systems but are yet to be connected to the rest of the machine. (We have been using “silicon delay lines” during main control commissioning to simplify the task of fault finding.) A video will shortly be uploaded to the project website showing the construction and commissioning of the store and a demonstration of the initial orders unit loading the initial orders into the nickel delay line store with a visual representation on the operator’s display screens.

Having at long last seen a simple program execute reliably for many minutes, the main control team has gone back and tidied up the circuits and documentation to provide a base line for the next stage, namely the commissioning of orders that either read operands from or write operands to store (which is the majority of the order code). In addition James Barr is embarking upon a redesign of the transfer unit which deals with aligning operands for either short or long number arithmetic. (John Pratt designed and built an initial transfer unit, but we have subsequently found the unit needs to delay by at least one minor cycle in all cases and its re-alignment is required by one and a half minor cycles – hence the need to revisit the design.)

Assuming the team is not too disrupted in the coming months we hope to make progress commissioning all of the arithmetical orders. James and his team are confident they are decoding and signalling to the arithmetic unit correctly and Nigel Bennée is confident the arithmetic unit calculates correctly so we hope, once operand reading and writing is working, we can progress to running useful programs. The tape reader and circuits for input are ready for testing and will follow checking out the arithmetic unit. The printer and circuits for output are in construction. There is beginning to be an ever brighter light at the end of the tunnel.


Data Recovery — Delwyn Holroyd We have made some progress on the road towards being able to read LEO magnetic tapes. The ICT 1301 tape decks are based around an Ampex TM-4 transport, the same type used with LEO machines albeit with a different head. However as I reported last year, we now have an 8-track LEO head. The first step is a working deck and to that end Rod Brown and I spent a day in TNMoC’s storage facility examining the deck we intend to use, which according to Rod was the most reliable one when the ICT 1301 was in use. By the end of the day we had applied power and managed to feed tape forwards. Unfortunately backwards was not possible as the actuator is out of adjustment. Several other mechanical issues were evident and Rod has taken away various parts to work on. We had been hoping to have another go at it in April, but of course world events intervened. We did however manage to move the deck from storage to TNMoC just before the lockdown commenced. This will make it much easier to work on the deck when we are able to. Meanwhile another group has rescued an IBM System 360 Model 20 – you can read all about it here: ibms360.co.uk. We’ve been asked to attempt data recovery from a set of about 20 reels of tape that were found with it. For this work I used our HP data recovery deck that is fitted with a combined 7 and 9 track tape head. I managed to capture raw data from all the tapes on my last visit to the museum prior to the lockdown. Analysis is ongoing but it appears that all the tapes have been overwritten with blank header labels. In some cases I’ve been able to extract previously recorded data beyond the blank header. This consists of lists of names and addresses of what appear to be plumbing and sanitary engineering companies in Switzerland! There are also a few dates in the 1980s. So unfortunately it appears that the tapes were not used with the System 360, and do not contain the hoped for software. |


Turing Bombe — John Harper We are rather concerned that, even if TNMoC was allowed to open with some limitations in the future, our operators might not wish or would not be able to give demonstrations whilst at the same time keeping a social distance. |


ICL2966 — Delwyn Holroyd

The failed 5V/75A store power supply mentioned in the last report has been replaced with the spare and the failed unit cleaned and repaired. The fault was an open circuit relay coil – the relay is part of the anti-surge circuit. The system console monitor and the 7501 terminal both suffered from intermittent loss of horizontal hold, and the remedy in each case was cleaning the adjustment pot. Visitors to TNMoC will be aware that one of the programs used to demonstrate the machine is Conway’s Game of Life, running under Maximop. Sadly John Conway died on April 11th. XKCD published a cartoon featuring a Life pattern of a figure that decays into a glider (xkcd.com/2293). I’ve added this to the repertoire of our demonstrations, ready to be installed when the museum is able to open once again.


The National Museum of Computing — Kevin Murrell

The museum is closed to visitors during the Covid-19 lockdown. We are not expecting to open until the end of May at the very earliest, but it will take a long time to build the visitor numbers back to the point before the lockdown began. We have put two of the full-time staff and all the casual staff on furlough until the end of May, which may yet be extended. Our museum director, Jacqui Garrad, is not on furlough and has worked tirelessly keeping the museum looked after. Andrew, Jacqui and I are pursuing all the funding opportunities available and are beginning to have some success in raising new funding. Jacqui is keeping the flow of information and entertainment from the museum going via social media. Our hugely valued volunteers have been working remotely and supporting the museum as best they can from home. I think we can expect many new and interesting exhibits to start to appear “as soon as this is all over”.


Elliott 803 & 903 — Terry Froggatt

As you may remember, last year TNMoC was loaned an Elliott 920M by the Rochester Avionic Archives (one of three that they have), and you may wonder why I’ve not reported about it since. In fact there has been little activity on this 920M, and considerable activity on other Elliott 920Ms, which would usually fall outside my reporting brief. But the matters are related, and at present there is little to report from TNMoC whilst it is in lockdown, so here is the wider story. Firstly a recap. Anyone who has been to TNMoC will be familiar with their Elliott 903 “011y” (that I routinely report on), which is one of a handful of 903s still working in the UK. This is a mid-1960s desk-sized machine, many of which were used by research institutes and which later found their way into schools. The CPU is essentially identical to the military 920B which was used in Forward Artillery Control Equipment (FACE) and in the Nimrod Mk 1 submarine hunting aircraft. The 920M is a shoebox-sized equivalent, which was used from the late 1960s in the Inertial Guidance System of the ELDO Europa Satellite Launcher, and in the Navigation & Weapon Aiming Subsystem (NAVWASS) of the Sepecat Jaguar aircraft. I was a member of the Jaguar software team, and we initially had access to a 920M MCM2 with a 5µsec store, but we moved onto a 920M MCM5 with a 2µsec store as the code grew and for the production run of some 200 aircraft. The 903 or 920B was built from discrete components, many of which are mounted on matchbox-sized Logic Sub-Assemblies (LSAs), on a total of 76 boards measuring some 8” by 5”, which are all easily removed from their rack for diagnostic card swapping. Spares for almost all of the components are available, and we have a full set of engineering manuals and circuit diagrams. The 920M is a different kettle of fish. It is approximately 32lb of Araldite, holding some electronics in tight formation, good for vibration to 7.5g. 
Of course the instruction set is known, and we know that the logic portion is largely made from DTL integrated flat-packs, but we have no circuit diagrams. The sales literature talks of “easily swapped modules” which is fine if you know which one to swap and have some spares. The modules are wire-wrapped in, so swapping them to locate a fault is not really on, and you cannot tell much about what is in them without cutting them open beyond repair. So it was clear from the onset that getting a 920M working might be a challenge. Well after my time at Rochester, at least two more versions of the 920M were produced as late as the 1980s under the Marconi-Elliott or GEC badges (for export to overseas air forces), namely the 920M MCM7 and the 920ME, neither of which I knew anything about until a couple of years ago. The MCM7 uses the same construction as the MCM2 & MCM5, “built in three hinged sections for maximum servicing accessibility” opening up to form a Z or N. The 920ME is an almost contemporary plug-in replacement for it, but of completely different construction with space to spare. Gone are the Araldite modules, there is a small rack with just a few slot-in easily removed cards using AMD 2900 bit-sliced chips. Gone too is the core store, to be replaced by a CMOS memory and battery backup – remember that the flight program had to be retained between missions, and was only reloaded by wheeling a PLU (program loading unit) to the aircraft when the software was updated. So what has been happening? Those of you who were on the CCS trip to Munich in April 2017 will remember meeting Dr Erik Baigar, who is something of an Elliott enthusiast. He has owned two 12-bit Elliott airborne computers since 2004, and in 2016 he bought a 920ME from eBay (the first time I’d ever heard of them, see Resurrection 80) and, after a little trouble with the power supply and memory controller chips, he got it working. So when I visited him in 2017 I was able to run some test programs on it. 
Then, shortly after we received the RAA’s 920M MCM7, another 920ME and three 920M MCM7s turned up on eBay, which Erik purchased, sending MCM7 number 5343 marked JAGEX to me. Erik was able to get his (second) 920ME working without too much trouble, given that he already had a test rig. Getting the MCM7s working has proved to be more difficult, as we expected. Erik has worked hard on this since the start of 2020, whilst I’ve provided some support by “ringing through” 5343 (unpowered). Problems encountered include:

The standard XSTORE store tests, TicTacToe, BASIC, Countdown, and 8 Queens have all now run. So exceptionally well done to Erik! There is much more, and numerous photographs (far too many to include here) on Erik’s site at www.baigar.de/TornadoComputerUnit/TimeLine.html.

My photograph here shows 5343, with covers removed and sections slightly opened up. The core store is in the left section, and power is being provided via one of Erik’s milled plug inserts. The FlexoWriter to the right was one of those used to prepare the Jaguar Flight Program in around 1970.

I was reluctant to power up 5343, knowing that neither of Erik’s MCM7s worked initially, and I only have limited test gear (an analogue multi-meter and a TTL logic probe, no oscilloscope), but 5343 did have a “serviceable 2/6/87” tag on it. So over Easter I assembled a test rig, getting -5.5V & lots of +5.5V (for the logic) from my own 903’s power supply, and getting +12V & +18V (for the store) from a laptop brick, all wired through the plug insert shown. Next I wired the control signals to a ThinkPad printer port, also simulating tape reader input and two bits of tape punch output. (A printer port is designed for 8-bit output, but not for 8-bit input unless it is attached to tri-state logic.) I took the precaution of disconnecting those troublesome capacitors, and fitting some externally.

Much to my amazement and luck, 5343 did power up. Even more amazing was that it contained a program, in fact, an issue of the Jaguar Flight Program. And it didn’t take long to find the “present position” and “first waypoint” in it, both at 17.677°N, 54.028°E. Look this up if you want to know where this computer was last used. But it is rather odd that this once-classified code was not wiped. (I arranged for an issue of the JFP to be de-classified back in 2016, see Resurrection 72.) Excitement was somewhat tempered by the discovery that one eighth of the store was zero (specifically the words 64 to 127 of every 512 words) but it should be possible to fix this using just one store module from the MCM7 which Erik is now using for spares.
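For readers who like to check such figures, the following minimal Python sketch confirms that words 64 to 127 of every 512 amount to one eighth of the store. The store size of 8192 words is an assumption made purely for illustration, and the function names are hypothetical; the fraction comes out the same for any store whose size is a multiple of 512 words.

```python
from fractions import Fraction

BLOCK = 512            # the fault pattern repeats every 512 words
BAD = range(64, 128)   # words 64..127 of each block read as zero


def is_affected(address: int) -> bool:
    """True if a store address falls in the zeroed part of its 512-word block."""
    return (address % BLOCK) in BAD


def affected_fraction(store_words: int) -> Fraction:
    """Fraction of a store of the given size that lies in the zeroed ranges."""
    hits = sum(is_affected(a) for a in range(store_words))
    return Fraction(hits, store_words)


# ASSUMPTION: a store size chosen for illustration only.
STORE_WORDS = 8192
print(affected_fraction(STORE_WORDS))  # 1/8
```

Equivalently, the affected addresses are exactly those whose bits 6 to 8 (counting from bit 0) hold the value 1.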
Possibly more worrying is that 5343 only runs for long enough to upload a handful of words before I have to recycle the power: I may need to investigate those capacitors. So where are we with the TNMoC’s 920M MCM7 number 88, which Andrew Herbert is currently looking after? Well, just as soon as lockdown is over, we should be able to test it with my breadboard power rig and ThinkPad, and then we can decide what to do next. We do now understand how most of the store modules function. The logic sections (especially how the microcode is implemented) remain largely a mystery, but luckily they seem to be more reliable. Finally if anybody out there does have the circuit diagrams, please do let us know! |


Software — David Holdsworth

LEO III

I’ve checked the Leo Society’s LEOPEDIA and the CCS links are now correct. Ken Kemp and Ray Smith are working on sorting out more demonstration material for Leo III emulation. I am encouraged by reports that Delwyn’s Data Recovery activities are close to reading Leo III tapes. The big hope here is that we shall find the CLEO compiler. The latest emulator for Leo III, which introduces separate Java implementations of the Leo III console and the Leo III printer, is still not available for Windows. There is low-level activity towards getting a clean resolution of the incompatibility between Microsoft’s implementation of TCP/IP sockets and everybody else’s.

KDF9

Although Bill Findlay and I have been making progress with KDF9 directors, there is as yet no new end-product in that area. Bill’s version 4 of ee9 has been compiled for Intel Linux in addition to Bill’s Mac version, so it can now be run under the Windows Subsystem for Linux (WSL). Experiments using tesseract 4.0 (the open-source OCR program) on scans of KDF9 documentation have started to yield fruit. The current state of play can be seen at sw.ccs.bcs.org/KDF9/SRLM-ocr/. Although tesseract does not retain the spacing within a line, I have been quite successful in experimenting with code to restore the left-hand margin. There has been very little manual editing in producing the above documents. There will be more of them in the future.

Atlas I

The Atlas I emulation project has been dormant for some time. However, the present situation has afforded Dik Leatherdale the opportunity to make some progress. The emulator “normally” performs input/output from and to files in the (Windows) host machine but an inauthentic facility was added many years ago to allow simulated card punch output to be displayed on a desktop window while the program is running. To this has now been added the contrary facility to accept data interactively as from a simulated card reader.
Although a working version of the Brooker-Morris Compiler Compiler was implemented some years ago, there has been no attempt to make use of it. But now a small demonstration program has been successfully created. The program syntactically analyses a conventional (integer) mathematical formula and calculates the result, all in fewer than 120 lines of code, confirming the genius of the original concept.


ICT/ICL 1900 — Delwyn Holroyd, Brian Spoor, Bill Gallagher

ICT 1830 General Purpose VDU

Continuing the work since receipt of the copy of TP4094: Visual Display (courtesy of the Centre for Computing History, Cambridge) has resulted in an almost completed change to drawing characters using what is likely a close analogue of the original method. In addition, all of the test/demonstration programs we have now appear to run satisfactorily under GEORGE 3 running on the 1904S emulator. Work is still on-going to reverse engineer the programs in order to recreate the original subroutine libraries as far as it is possible.

ICT 1905 Emulator

The 1830 has been added to the 1905 emulator; the only known installation of an 1830 was with a 1909 in Berlin. Most problems with the 1974 magnetic tape emulation are now fixed, certainly under E4BM; further E6RM testing is needed. In addition, a few minor bug fixes and enhancements have been made.

E4BM Executive for 1905

A new executive package to support the 1830 is currently being written; the original package is lost to the mists of time. This package is being based on the existing 1938 Interrogating Typewriter package as the requirements/functionality are similar. Currently working modes are: open, close and write to 1830. The read and combined write/read modes need to be completed for full functionality. We can now successfully generate a new E4BM under E4BM.

ICL 1904S Emulator

The 7930 scanner emulation is now able to write data to its lines. Reading data is not yet working. A lot more work and testing is required. In addition, a few minor bug fixes and enhancements have been made.

Batch Application Demonstration System

With the application of WD40 to Brian’s COBOL memories further progress has been made on an Order Processing system (mark 1) for a fictitious mail order company.
The purpose of this system is to show what was required to process data back in the days when your input medium was cards, master files held on magnetic tape and everything processed in batch runs. This is very much an on-going longer term project as a reminder of how things used to be, before the days of databases and online access. Once the current magnetic tape mark 1 system is completed, a mark 2 is envisaged moving the master files to disc, then a possible mark 2A version introducing VDUs and online order entry. ICL 1900 Website (www.icl1900.co.uk) More manuals added to the Programming and Applications sections. ICL PF50 Further to the article by John Harper in Resurrection 89, Brian wishes to make it clear that he covered the cost of shipping the E4BM documentation to Bill for scanning – many thanks to Jacqui Garrad at TNMoC for her assistance in this matter. |


Harwell Dekatron/WITCH — Delwyn Holroyd The machine has been running well since the replacement of the HT power supply choke. A number of failed store anode resistors have needed replacement plus a coupling diode. The start switch on the control panel has also been replaced. |

CCS Website Information

The Society has its own website, which is located at www.computerconservationsociety.org. It contains news items, details of forthcoming events, and also electronic copies of all past issues of Resurrection, in both HTML and PDF formats, which can be downloaded for printing. At www.computerconservationsociety.org/software/software-index.htm can be found emulators for historic machines together with associated software and related documents, all of which may be downloaded.

News Round-Up


Tim Denvir writes to point out that this year is the 60th birthday of Algol 60. He opines that “Algol 60 at 60 is a pleasing assonance”. We covered Algol 60 in some detail on the occasion of its 50th in Resurrection 50 – more assonance – so this year we just note it in passing with a smile.

101010101

Guy Haworth is researching the AEG-Telefunken TR4 computer of the 1960s. If readers have any documentation beyond that held at Bitsavers then Guy would be grateful. Contact via the editor please.

101010101

There have been a number of passings of notable IT people. Margaret Sale is remembered below, but sadly there are more –

101010101

Brian Aldous writes to tell us that the treasure trove which is “Communications of the ACM” is now freely available at dl.acm.org/loi/cacm. Stretching back to January 1958 and forward to the current issue it forms an unparalleled record of the development of our discipline.

Subscriptions

This edition of Resurrection is the last in the current subscription period. Go to www.computerconservationsociety.org/resurrection.htm to renew for issues 91-94, still at the modest price of £10. New subscribers are, of course, welcomed.

Packet Switching and the NPL Network

Peter Wilkinson

At the back end of 1966, Donald Davies, then Superintendent of the Computer Science Division of the National Physical Laboratory, was authorised by NPL management to set up a small team to investigate the feasibility of packet switching (see later) as a means of interconnecting computer services. Davies had first proposed the basic idea in a short memorandum published in 1965.

Originally, Davies had been recruited to NPL in around 1947 to act as an assistant to Alan Turing, who was to join the laboratory from Bletchley Park in order to design and develop an early computer. Turing quickly became disillusioned with the civil service and departed for academia, leaving Davies as a key member of a team aiming to realise Turing’s outline design ideas, first in the form of Pilot ACE, which later transformed into the full ACE (and also spawned an early commercial machine, DEUCE). ACE was still providing a computing service to the NPL in 1966 although by then it had been joined by the English Electric KDF9. By the mid-1960s the first generation of computers was being replaced by more powerful ones which did not require a team of hardware engineers to keep them running 24/7 (remember “powerful” is a relative term, probably still less than a modern wrist watch!). New techniques such as virtual memory and time-slicing were coming into play, allowing machines to run several jobs (programs) simultaneously. In the USA around this time, Project MAC was being developed at MIT to allow computer users direct access from terminal devices (teleprinters or even display/keyboard combinations) to load and run programs under their own control and receive the resulting output directly rather than via some intermediary operator. Some of these terminals were to be remotely sited and connected via dedicated landlines hired from one of the telephone service providers. The nascent but growing computer services industry was also interested in such communications services so that, for example, rather than collecting application data at various local sites, storing it on magnetic tape and then transporting said tapes by road to a processing centre, the data could be transferred directly over dedicated communication links to a processing centre. 
Such communications facilities were being provided by local telecommunications operators whose facilities were primarily aimed at speech (telephony) traffic. A dedicated ’phone line would have a modem at either end to match the digital traffic to a speech circuit and would, at that time, typically run at 600 or 1200 bits/second, far too slow for the transfer of large files of data or to enable high speed interactions. Davies recognised that speech networks, apart from having too little data bandwidth, were badly matched to the needs of such bursty data traffic and that the evolving situation called for a different type of network that could allow long messages (e.g. files of data) to be transferred between multiple sources and destinations while at the same time allowing fast responses for real-time interactive traffic. A speech network dedicates a circuit for the duration of a call whether or not there is meaningful traffic on that circuit, while setting up and clearing down each circuit then took several seconds, far too slow for interactive traffic. In his 1965 memorandum, Davies envisaged a dedicated network of high-speed links interconnected via switching centres (“nodes”), themselves based on small computers, that would transfer short message blocks (which he named “packets”) from node to node across the network. Each packet would include source and destination addresses, error correcting code and some limited management information. The nodes would be provided with routing tables to ensure that each packet was directed along the most efficient route, with alternatives to cope with failure situations. The packet switched network can therefore be regarded as a mechanism for transferring streams of interleaved packets simultaneously between multiple sources and destinations; because packets are short and link speeds high, both good transfer rates and short response times are enabled. 
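For readers who prefer code to prose, the scheme just described can be sketched in a few lines of Python. This is a minimal illustration and not a reconstruction of the NPL design: the class and field names are hypothetical, the node names "A" to "D" are invented, and a CRC-32 check (which detects errors but does not correct them) stands in for the error correcting code mentioned above.

```python
import zlib
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Packet:
    """A short message block carrying its own addressing information."""
    source: str
    destination: str
    payload: bytes
    check: int = 0  # ASSUMPTION: CRC-32 stands in for the error code

    def seal(self) -> "Packet":
        """Compute the check value before the packet enters the network."""
        self.check = zlib.crc32(self.payload)
        return self

    def intact(self) -> bool:
        """Re-check the payload on arrival at a node."""
        return self.check == zlib.crc32(self.payload)


class Node:
    """A switching centre holding a routing table with alternatives."""

    def __init__(self, name: str, routes: Dict[str, List[str]]):
        self.name = name
        # destination -> neighbouring nodes, most efficient route first
        self.routes = routes
        # every link starts in service; set an entry to False to fail it
        self.link_up = {hop: True for hops in routes.values() for hop in hops}

    def next_hop(self, packet: Packet) -> Optional[str]:
        """Return the neighbour to forward to, or None if undeliverable."""
        if not packet.intact():
            return None  # damaged packet: simply dropped in this sketch
        for hop in self.routes.get(packet.destination, []):
            if self.link_up[hop]:
                return hop
        return None


node = Node("A", {"D": ["B", "C"]})
packet = Packet("A", "D", b"hello").seal()
print(node.next_hop(packet))  # B
node.link_up["B"] = False     # simulate a link failure
print(node.next_hop(packet))  # C
```

The routing table lists the preferred neighbour first, so the alternative is only used when the direct link is out of service.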
Originating and receiving computers, themselves connected directly to the packet switches, would include extra management information in the packet data field to allow them to, for example, collect a number of packets together into one longer message by means of a message identifier and a sequence number. The packet switching network would be entirely transparent to such higher-level protocols. Another very significant advantage of the packet switching technique is its flexibility. As packets are held in the memory of each node computer before being forwarded to the next, it becomes relatively simple to upgrade the network by increasing link speeds in a piecemeal fashion. Adding extra links, nodes and user computers mainly becomes a matter of updating the routing tables. This flexibility has been evident in the modern internet, which has grown enormously in terms of speed and capacity yet in a manner almost invisible to its users. The team Donald Davies assembled in 1966, under the management of Derek Barber, consisted of Roger Scantlebury, Keith Bartlett and Peter Wilkinson. Its remit was to undertake a feasibility study of a possible UK national-scale packet switched network comprising 12 nodes covering 12 major centres (all of which had universities with significant computing facilities). The inter-node links were assumed to run at 1.5 Mbits/sec based on a planned trunk network upgrade by the Post Office. The Post Office at that time was a department of government providing both the mail/telegram and speech telephony services. The feasibility study made an outline design of the link protocol and routing algorithm, considering three possible configurations for the node computer, with maximum, intermediate and minimal link hardware to assist the node computer to run the protocol, input and error-check the packets. 
At the same time the node computer software processing the packets and handling their routing was sketched and its performance estimated for a then typical process-control minicomputer. The intermediate configuration proved most cost effective, with the node computer capable of handling around 250 packets/sec. Using this data, a simulation of the complete 12-node network was developed (by Roger Healey) and its performance estimated for various levels of offered traffic. This gave some idea of the overall throughput and response time achievable using the full network, which were generally acceptable for moderate traffic levels. However, as offered traffic ramped-up, the worrying discovery was made that the network became saturated and throughput collapsed. Although a shock at the time, with the benefit of hindsight it is obvious that something like that must happen. The simulated network is overconnected with several possible paths between most source/destination pairs (essential if the system is to be resilient to link or node failures). Normally a packet held in any node is forwarded via the link giving the most direct route to its destination. However, when a long output queue is detected, the node forwards via an alternative link with a shorter queue on the assumption that it will thus reach its destination faster. This “myopic” alternative routing mechanism was adopted for simplicity since it does not require the network to develop an overall picture of system status. The consequence is that, just as the network is becoming busy, packets are being forced to take longer routes hence the amount of work needed to process the same level of traffic begins to increase. Donald Davies was concerned by this finding and, typically inventive, proposed a solution (which he termed “isarithmic”) to keep offered traffic levels within bounds, based on the use of permits (or tokens). No sender could introduce a packet to the network unless it possessed a token. 
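Both the "myopic" routing rule and the permit idea can be sketched briefly in Python. This is a minimal illustration under stated assumptions, not the simulation code used at NPL: the function and class names, the queue threshold and the single shared pool of permits are all hypothetical simplifications.

```python
def choose_link(links, queue_len, threshold):
    """Myopic choice of outgoing link.

    links      -- outgoing links for a destination, most direct first
    queue_len  -- mapping from link to its current output queue length
    threshold  -- ASSUMPTION: queue length regarded as "long"
    """
    direct = links[0]
    if queue_len[direct] <= threshold:
        return direct
    # Long queue on the direct route: take whichever link has the
    # shortest queue, using only what this node can see locally.
    return min(links, key=lambda link: queue_len[link])


class PermitPool:
    """Isarithmic control with a fixed number of permits (tokens)."""

    def __init__(self, permits):
        self.free = permits

    def admit(self):
        """Let a new packet into the network only if a permit is free."""
        if self.free == 0:
            return False
        self.free -= 1
        return True

    def delivered(self):
        """A packet has left the network, so its permit becomes free."""
        self.free += 1


queues = {"direct": 9, "detour": 1}
print(choose_link(["direct", "detour"], queues, threshold=5))  # detour

pool = PermitPool(permits=2)
print([pool.admit() for _ in range(3)])  # [True, True, False]
pool.delivered()
print(pool.admit())  # True
```

The detour relieves one queue but adds hops, which is why total work rises just as the network gets busy; the permit pool instead caps the number of packets in flight.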
Once a packet had reached its destination the associated token would be re-used locally or redistributed according to some algorithm. The number of tokens available in the network was limited in order to maintain moderate traffic levels. Obviously the isarithmic mechanism would need careful management in order to avoid overly restricting system throughput and in itself represents an additional system overhead. The issue of network traffic load management is a significant one which has a range of possible solutions. Donald subsequently pursued these questions by setting up a specific network simulation group under Wyn Price. The NPL’s more detailed network proposals were described in a joint paper which was presented by Roger Scantlebury at a conference in Gatlinburg in 1967, where a paper summarising the plans for a broadly similar US network, which became known as the ARPANET, was also presented by Lawrence Roberts of the Department of Defense, but without defining its intended communications technology. The upshot, resulting from discussions at that conference, was that packet switching was adopted for the ARPANET. The ARPANET of course became the principal forerunner of the Internet. In the USA there appeared no significant barriers, either financial, organisational or in motivation or skills, to taking these ideas rapidly forward and DoD contracted the engineering firm Bolt, Beranek and Newman to build the ARPANET packet switched communications network. In the UK, by contrast, such barriers proved insurmountable, at least in the short/medium term. Over the period 1965 to 1968 Davies had liaised closely with the UK IT industry and with major players such as senior staff in Post Office (Telephones), holding several conferences and meetings on the subject of data networking. Many individuals within the IT industry were strongly convinced of the need for a national network and indeed formed a lobby group (the Real Time Club) to promote such ideas. 
However, while showing definite interest in the technical concepts (to the extent that they later agreed to second one of their engineers, Alan Gardner, to the NPL team) the GPO was unconvinced of the economic viability of such a network, believing that speech traffic would always dominate data traffic. It was therefore reluctant to sanction use of its trunk network technology as the backbone for a national data network. The Ministry of Technology (NPL’s then overlord) was in no position to insist and passed the buck to NPL. That left only the possibility that the NPL team could demonstrate the feasibility of packet-switching by building a purely local network to cover the NPL’s own campus (then about 78 acres and half a mile in width). This newly proposed NPL network was to provide a range of computing services (such as storage for files) as well as basic communication facilities, so that many types of peripheral devices and existing computer services could be interconnected. Costs were to be covered from within NPL’s existing budgets and management sanction was obtained. In Davies’ early network proposals, connection of devices and services locally to each other and to the high-level packet network was to be made by an interface computer. Thus the task now was to fully define the functionality of an interface computer for the NPL and start its implementation, which began in earnest in mid-1968. The NPL campus was already provided with a backbone network of co-axial cables capable of rates of 1 Mbit/second, then incomparably faster than speech network modems. Consideration was being given to adopting a newly-designed British minicomputer, the Plessey XL12. The XL12 had an ideal input/output mechanism capable of attaching up to 512 terminal devices via a “demand-sorting” system which transferred data from attached devices directly into computer memory. 
Alas, a government-inspired reorganisation of the UK computer industry prompted Plessey to cancel further development of the XL12 in the summer of 1968. The NPL team had earlier undertaken an evaluation of available minicomputers in arriving at its decision to buy the XL12. The Honeywell DDP516 was chosen as next best, in part because it also had flexible direct-to-memory input/output facilities. This turned out to be a good decision, not least because the Honeywell engineers were interested in the project and willing to adapt its I/O mechanisms to NPL’s requirements. Incidentally, the DDP516 had also been chosen by the ARPANET team as its packet-switching node at around the same time and for similar reasons. The first DDP516 arrived on site in early 1969 (another was later purchased as a backup). At a meeting in Barber’s office in the autumn of 1968, early progress on the network development was discussed. Scantlebury and Bartlett had already been designing some aspects of the communications hardware and Wilkinson was formally tasked with producing the corresponding software for the DDP516. However, at that stage there was no detailed specification of the network’s overall functionality and major aspects were still up in the air. Some decisions had already been taken regarding the transmission hardware, including the crucial one that the network should offer an interface to its subscribers based upon British Standard BS4421, in whose creation Derek Barber had played a major role. Its key features are that it is a fast parallel handshaked interface, the latter being essential for flow-control. During 1967/68, Scantlebury and Bartlett had designed a link control mechanism for use in the network, allowing reliable full-duplex transmission between a master/slave pair of “line terminals” at either end of one of the co-axial cables. Another task underway was the design of a multiplexing scheme to replace that lost with the demise of the XL12. 
The plan was to have 8-way multiplexers that could be stacked in up to three layers, permitting a theoretical maximum of 512 connected terminals. Each multiplexer contained a demand-sorter to arbitrate clashes between its inputs. Each multiplex layer added a three-bit address to its output to the next higher level, so the top level multiplexer would present to the computer a data byte from the originating terminal accompanied by a nine-bit terminal address. The non-standard Honeywell hardware (developed during 1969) would then use that address to store the terminal data in an allocated buffer in the DDP516 memory. Multiplexers could either be interfaced directly to their predecessor/successor using a parallel BS4421-like connection, or be joined via a serial link using a line-terminal pair. The system thereby offered considerable flexibility. The question still unaddressed was – how should the communication between subscribing terminals on the NPL network be organised? Packet-switching is a radical departure from the traditional telephone system whereby one subscriber makes a call to another, setting up a circuit for that purpose. Paradoxically perhaps, that was also seen as the natural way to organise the NPL network; if one terminal wishes to interact and send/receive data with another then the network should permit them to establish a virtual call. “Dial”, specify the destination “number”, if available, establish the “connection”, interchange data (in the form of packets, because that was how the central computer server was intended to operate) and finally end the connection when no longer needed. This approach was thrashed out by all concerned and made apparent the need for a third type of hardware module, a network termination unit. This was named the Peripheral Control Unit (PCU). The PCU offered the BS4421 interface to subscriber equipment and would itself interface directly, either to a multiplexer or to a slave line terminal unit. 
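
The addressing arithmetic of the stacked multiplexers described above (three layers, each contributing a three-bit port number, giving a nine-bit terminal address and 8 × 8 × 8 = 512 terminals) can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, and the bit order (top-level multiplexer port in the most significant bits) is an assumption, since the text does not say how the three fields were arranged.

```python
LAYERS = 3                     # multiplexers stacked in up to three layers
BITS_PER_LAYER = 3             # each 8-way multiplexer adds a 3-bit address
WAYS = 1 << BITS_PER_LAYER     # 8 inputs per multiplexer
MAX_TERMINALS = WAYS ** LAYERS # 512 terminals in theory


def terminal_address(ports):
    """Combine one port number per layer into a 9-bit terminal address.

    `ports` is ordered from the top-level multiplexer down to the one
    nearest the terminal (an assumed ordering, for illustration only).
    """
    if len(ports) != LAYERS:
        raise ValueError("expected one port number per layer")
    address = 0
    for port in ports:
        if not 0 <= port < WAYS:
            raise ValueError("port numbers run from 0 to 7")
        address = (address << BITS_PER_LAYER) | port
    return address


def split_address(address):
    """Recover the per-layer port numbers from a 9-bit address."""
    if not 0 <= address < MAX_TERMINALS:
        raise ValueError("address must fit in nine bits")
    ports = []
    for _ in range(LAYERS):
        ports.append(address & (WAYS - 1))
        address >>= BITS_PER_LAYER
    return tuple(reversed(ports))
```

For example, `terminal_address((1, 2, 3))` gives octal 123, and `split_address` reverses the step, much as the DDP516 hardware used the address to pick the buffer belonging to the originating terminal.
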
The PCU was equipped with a small specialised keypad comprising four buttons with lights, by means of which the operator at a subscriber terminal could establish and manage a virtual call to another. For simple devices a numerical keypad was also provided to allow input of a destination address. It was now necessary to alter the communication hardware designs so that both user data bytes and virtual call status information could be transferred to the central computer. A ninth data/status bit was added, so that in total an 18-bit unit would be received at the centre. With system design complete, it was possible for hardware and software teams to concentrate on their specific areas. Thus in early 1969, design turned to implementation. The hardware team under Keith Bartlett’s leadership undertook final detailed specification, implementation and testing of master/slave line terminals, multiplexers and PCUs. Bartlett’s team comprised Les Pink, Patrick Woodroffe, Brian Aldous, Peter Carter, Peter Neale and a few others. They also liaised with Honeywell in the adaptation of the DDP516 input/output controller. On the software side, Peter Wilkinson was assisted by Keith Wilkinson (no relation) and Rex Haymes. One downside of the choice of the Honeywell computer was that it came with very little software. There was an assembler/loader for its simple assembly (low-level) language, DAP16, and a real-time executive, which a quick assessment suggested was aimed primarily at process control applications (such as running a chemical plant). It did not offer an appealing mental model for how the software of the network computer should be organised. There were no readily available publications on communications software and the like, as this was such a new field, so Wilkinson was faced with a blank sheet of paper – actually a good place to start as there are no constraints! It was essential to find some general organising principles that would help to structure the task. 
In that, Wilkinson was fortunate. Before joining the network group he had worked in the programming research group, with Mike Woodger as a colleague. Woodger had joined NPL at about the same time as Davies, also to be an assistant to Turing. He had also worked in the ACE computer team and later became one of the authors of the high-level programming language Algol 60. He had subsequently continued as a member of a committee defining an even more powerful language, Algol 68, where one of his colleagues was the computer scientist Edsger W Dijkstra, then working at the Technical High School in Eindhoven. Dijkstra was a proponent of the notions of process and of hierarchy and had used these in the development of a model operating system, of which he had produced an elegant description, available through Woodger. A process is an abstraction, ideal in situations where a single central computer is dealing with an external environment in which many events can arise independently. External events can be represented by distinct processes in the computer; they share its central processor and other resources and communicate with each other in order to carry out the overall computing task required. All that is needed is a simple executive which ensures that each process is given a slice of computing and other resources. Hierarchically structured software is organised into distinct and well-defined layers in which each layer provides services to the layer above via a clearly defined interface. Wilkinson decided to structure the network computer software as a small number of processes, each carrying out particular tasks. It was further decided that these processes would communicate via an internal message-passing mechanism (by analogy with the network itself). An executive was designed with layers for process scheduling, message queue handling and memory management (dealing with packet buffer allocation to receive and forward subscriber traffic). 
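
To make the idea of processes communicating by messages under a simple executive more concrete, here is a minimal Python sketch. It is not a reconstruction of the DDP516 software: the class names, the round-robin policy and the two example processes are all assumptions chosen for illustration, and memory management is left out altogether.

```python
from collections import deque


class Process:
    """A process is just a handler plus a queue of pending messages."""

    def __init__(self, name, handler):
        self.name = name
        self.handler = handler
        self.inbox = deque()


class Executive:
    """Tiny executive: queues messages and gives each process a turn."""

    def __init__(self):
        self.processes = {}

    def add(self, name, handler):
        self.processes[name] = Process(name, handler)

    def send(self, destination, message):
        """Message passing is the only way processes interact."""
        self.processes[destination].inbox.append(message)

    def run(self):
        """Round-robin scheduling: on every pass each process with a
        pending message handles exactly one, until all queues are empty."""
        busy = True
        while busy:
            busy = False
            for process in list(self.processes.values()):
                if process.inbox:
                    message = process.inbox.popleft()
                    process.handler(self, message)
                    busy = True


def demo():
    """Two hypothetical processes: one receives data, one forwards it."""
    forwarded = []

    def receiver(executive, message):
        executive.send("forwarder", message.upper())

    def forwarder(executive, message):
        forwarded.append(message)

    executive = Executive()
    executive.add("receiver", receiver)
    executive.add("forwarder", forwarder)
    executive.send("receiver", "packet-1")
    executive.send("receiver", "packet-2")
    executive.run()
    return forwarded
```

Calling `demo()` returns the two messages in the order they were sent. Each process can be exercised on its own by feeding it messages, which is the property that made unit-by-unit testing practical; the price is the queueing work done for every message.
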
The DDP516 generated an interrupt each time a status byte was received, which the executive software turned into a new message, queued for the appropriate process. The software for the various layers and processes was written using the high-level language PASCAL (related to Algol 60) and then hand-translated into DAP16 by the team. The software produced by this approach was highly modular, so that useful testing could be carried out on each unit in isolation. In this way, when the full system was assembled, most of the errors had been eliminated and the final integration testing was relatively painless. The network software came into operation in January 1970 and an informal network service was initiated, though at that time few if any of the services intended to be accessed via the network were available. However, once the system had reached an acceptable level of reliability, some performance measurement could be undertaken. The outcome was rather disappointing, as the switching computer was only capable of a maximum throughput of around 150 packets/sec. This was mainly a consequence of the high processing overheads introduced by the message-passing mechanism. Nonetheless the performance was adequate for the initial trial uses of the system. During 1970 various services and facilities were added to the network to make it attractive to its users throughout the Laboratory. The KDF9 computer service was connected, allowing remote access and job entry; a contractor was engaged to produce the software for a filestore service on another DDP516; a new information retrieval system, being developed by another group in Computer Science Division under David Yates, was linked-up to provide access to its users. While the NPL network was beginning to prove its worth, besides low packet throughput, another limitation was becoming more apparent: all subscribers, including computer services, were connected as simple terminals. 
If a computer service was to deal with several simultaneous users, it therefore required several network connections via PCUs, an inefficient use of hardware and an inflexible approach overall. It would be far preferable to allow the service computer to handle the virtual call mechanism by software and to provide it with a link to the network at the packet-level. These limitations led to a rethink and a plan to upgrade the network service from what in 1970 was described as the “Mark I” to a shiny new “Mark II”. Mark II came into full service in 1973 but that is another story, as they say. At a distance in time of over 50 years, memories fade, become unreliable and detail starts to blur. Needless to say, this account is not just made from memory. The NPL team made many publications along the way. Most particularly, in 1985/86 they invited Martin Campbell-Kelly, then of Warwick University, to meet with them, review all their activities, conduct interviews and produce a report overviewing the work of the communication network team over the period 1965 – 1975. That report has guided this article for Resurrection. [Data Communications at the National Physical Laboratory (1965-1975). Annals of the History of Computing, 9 (3/4), 1988]. That report contains many of the key references, for anyone wishing to follow up the details. On a personal note I look back on the NPL network project as the most exciting and fulfilling of my life, working with a team of excellent colleagues and doing something which was both fun and challenging, with a lasting impact. Of course, it is fair to say that no one, not even Donald Davies, had even the haziest idea of what the Internet would eventually become. It is also paradoxical to realise that while speech traffic certainly dominated the communications scene in the early 1960s, the boot is now completely on the other foot, as speech networks are now packet-based. 
I also think it is true that the Internet, and particularly packet-switching, owes more to Donald Davies than it does to any other single person. In summary, the NPL campus network (Mark I) was the first digital local area network in the world to use packet switching and high-speed links.

Thoughts on the ICL Basic Language Machine

Virgillio Pasquali

In Resurrection 87 we published an account of the deliberations which led to the ICL New Range of the 1970s. Reader Mike Hore wrote to the author to discuss the reasons why John Iliffe’s Basic Language Machine was not selected as the basis for New Range. The response is interesting and so is reproduced here. [ed]

I cannot help feeling that you are asking the wrong person why ICL selected the Synthetic Option instead of the BLM. The Jury, in October 1969, spent more than a week secluded in Cookham conference centre, investigating the two proposals and interrogating people. The top 12 technical managers in ICL, under the chairmanship of Colin Haley, were certainly capable of forming their own opinion based on what the company needed at the time. I did not participate in their deliberations, for obvious reasons, and the Jury never published their detailed analysis or discussed their findings with me (I was not part of the Jury as I, like Roy Mitchell of the BLM option, was the leader of one of the two options on trial). But I remember that the choice was not predetermined. They looked carefully at both the options and arrived at their own conclusions, and clear recommendations. But I will tell you why I thought, and I still think, that they made the right choice. It is my own view, and I do not know if this is the reason why ICL did not adopt BLM. It can be summarised by relating a conversation that Mike Forrest and Gordon Scarrott had while we were having a drink at our local pub after work. Gordon said to Mike “Why are you building a road while you have a motorway available to you?” and Mike replied “If the motorway does not take you where you want to go, it is of little use”. BLM, conceived by John Iliffe while at Rice University in the USA, was a great technical idea that did not fit the market. (Many years later I had a similar experience with CAFS. 
A brilliant idea, and I insisted it should be marketed against the opposition of the Data Management team. It was not a success in the market, and we lost money and, more importantly, we wasted scarce resources. But even today we technical people talk of CAFS as a great product). I do not remember the technical details, but a few relevant facts are:

A number of articles in Resurrection 35, attempting to analyse why the UK computer industry did not succeed (in spite of the creativity of our technical people and excellent technologies), could be helpful to you in giving an overall perspective of the issues. One final point: when ICL failed to adopt the BLM in 1969, I told John Iliffe that he could seek another company to adopt BLM. ICL would not impose any property rights and we would give it away for nothing. But, as far as I know, John could not find anybody interested, not even in the USA where there was plenty of capital to exploit innovation (while we were starved of capital and resources).

Obituary: Margaret Sale

Stephen Fleming

Why is there no well-known Swiss IT Industry?

Herbert Bruderer

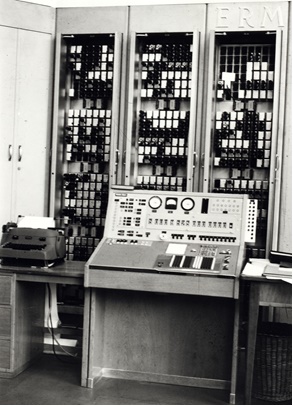

Computer science in Switzerland began in 1948 with the founding of the Institute for Applied Mathematics at ETH Zurich. Eduard Stiefel was the director, the two assistants were Heinz Rutishauser (chief mathematician) and Ambros Speiser (chief engineer). In 1950, ETH Zurich was the first and only university in continental Europe with a functioning punched-tape controlled computer, the Zuse Z4 (built in 1945, restored and expanded in 1949). In Sweden, the plugboard-controlled Bark relay computer was put into operation in 1950. The Swiss Polytechnic used the leased mechanical relay computer from 1950 to 1955. In 1951 the trio published a fundamental work on computer construction. One year later Rutishauser’s postdoctoral thesis on automatic programming (program production with the help of the computer) was published. He was one of the fathers of the Algol programming language. In 1956, the self-built Ermeth electronic vacuum tube computer was operational. Despite these good conditions, no IT industry emerged in Switzerland in the 1950s. This has often puzzled scholars. What were the reasons?

Bern Hasler AG wanted to market Ermeth worldwide

The company Hasler AG, Berne (today Ascom), was involved in the construction of the electronic computer and also provided financial support. It has only been known for a few years that Hasler wanted to market the expensive machine worldwide. To this end, it concluded a licence agreement with the ETH in 1954 for the magnetic storage drum, which it had not helped to construct. The development of the drum memory device had caused great difficulties. However, Speiser left the ETH at the end of 1955 – before the completion of Ermeth. Surprisingly, he became the director of the newly founded IBM Research organisation near Zurich. This thwarted Hasler’s plans. At this time, two other US research laboratories were coming to Switzerland in addition to the blue giant: Radio Corporation of America (Zurich) and Battelle (Geneva). IBM recruited specialists with high salaries. Speiser’s departure for the competition led to discord between Hasler and ETH. On the instructions of the President of the Swiss School Board, Speiser had to make an appointment with the General Director of the Berne company. Hasler lost interest not only in the reproduction of the magnetic storage drum, but also in the entire computer. This background has only recently become known thanks to finds in the ETH university archives. A detailed account of the dispute between Speiser, Hasler and ETH can be found in Milestones in Analog and Digital Computing, volume 2.

Both machines have been preserved. The Z4 is now located in the Deutsches Museum in Munich, the Ermeth and its drum memory are on view in the Museum für Kommunikation in Berne.

Later attempts also failed

Even later it was not possible to build Swiss computers in large numbers. This is true e.g. of the Cora transistor computer (1963) made by Contraves, the Lilith workstation (1982) of Niklaus Wirth and the Gigabooster supercomputer (1994) by Anton Gunzinger. Today, in addition to Logitech, there are numerous research centres in Switzerland run by Internet giants such as Apple, Facebook, Google, and Microsoft.

This article appears by permission of Communications of the ACM and the BLOG@ACM (see cacm.acm.org/blogs/blog-cacm/242462-why-is-there-no-well-known-swiss-it-industry/fulltext). Photos (except that of the drum) are courtesy of ETH Library, Zurich, image archive.

Herbert Bruderer is a retired lecturer in computer science at ETH Zurich (Swiss Federal Institute of Technology) and a historian of technology. He was co-organiser of the International Turing Conference at ETH Zurich in 2012. Email: bruderer@retired.ethz.ch.

Ho Hum, Nothing to Do

Michael Clarke

This is perhaps just a whimsical reminiscence of days gone by, but one never knows. Let me start with what prompted this look at computers with nothing to do. I am a Beta tester for a commercial gaming simulator product. I have a high-end Intel i7 PC with lots of RAM and a good graphics card, and a venerable yet strangely still competitive Intel Q6600 PC (2008 vintage) with slower and less RAM and an older, slower graphics card. The old machine is my email and news sniffer machine, always looking at the WWW. The i7 PC is often just doing testing and development of scenarios that I create for the simulator. From time to time I use the simulator’s export/import process to transfer my test scenarios to the Q6600 PC and check that they run correctly. The old machine is very much at the bottom range of recommended systems needed to run the simulator and should be using lower display levels, shadows, viewing distance etc. I have found that the old machine works with no discernible difference even when set to the highest display levels. So, I investigated, and the result was what led me to the title of this item. “Ho Hum nothing to do” is a euphemism for the computer operating system’s scheduler and my first involvement with schedulers at RRE Malvern way back in 1968. RRE was developing the Algol68R compiler. The 1907F had passed rigorous commissioning tests in September, every peripheral was brand new and customer engineers had very little to do except scheduled weekly maintenance, so I was learning to program computers. A few Executive mends, just a few lines of code, were hardly stuff to learn from, so I used its Algol compiler to work out my expense sheet for mileage travelled on company business, which was complicated and needed to be worked out every week. 
I refined my program down to a single statement with lots of declarations, subroutines and a simple data file: about 200 lines of actual Algol instructions and, when compiled, about 6Kw of object code. It often required changes as I was not that good at writing programs, so it needed to be re-compiled weekly! This I did after the RRE work had ended or before RRE staff started work, depending upon which shift I was working that week. I was therefore running this compilation in a very powerful dual processor (1907F) computer that was doing nothing else. Well, most of the time; occasionally I ran it after a consult with the RRE people as to why my program failed to compile, or gave rubbish answers, for which they were extremely appreciative, may I add, as I was throwing up all sorts of usage problems with their compiler. I noticed that the total elapsed time to compile my thousand or so lines of Algol instructions was the same when running alongside the normal RRE work load and in my solitary runs in an empty machine. I brought this strange phenomenon to the attention of the RRE people and the George III support person (David Owen) and we discovered that the George scheduler, which worked essentially on a per minute slot time, was doing exactly that. My compile required just a few seconds of computer time but was being given quite small scheduled time slots, and between them George III and the Executive (which had a 200ms timer interrupt) were going into this “Ho Hum nothing to do” hiatus, where the Executive knew that it had no active process but had been told only to wake up George once a minute when the real time changed! This strange effect was quickly addressed and normal throughput at RRE increased slightly and my expenses program compiled in seconds rather than minutes in an empty machine. This brings me back full circle to the remarkable performance of my venerable Intel Q6600 PC. I turned on Windows 10 Pro Task Manager’s performance monitoring. 
When running my most demanding scenario with intensive display and AI loads, the i7 was using about 41% CPU and the Q6600 about 75% CPU. I have not investigated further, but it would seem that “Ho Hum nothing to do” is alive and well in Windows 10 Pro.
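
The 1907F effect described earlier can be put into rough numbers with a short Python sketch. It is only a toy model under stated assumptions: the job receives one fixed slice of processor time each time the scheduler wakes, and in an otherwise empty machine nothing happens between wake-ups. The five seconds of processor time and the one-second slice are invented figures; only the once-a-minute wake-up comes from the account above.

```python
import math


def elapsed_seconds(cpu_seconds, slice_seconds, wakeup_interval=60.0):
    """Elapsed time for a job that gets one slice per scheduler wake-up.

    Assumes the machine sits idle between wake-ups, so every slice but
    the last costs a full wake-up interval of real time.
    """
    if cpu_seconds <= 0 or slice_seconds <= 0:
        raise ValueError("times must be positive")
    wakeups = math.ceil(cpu_seconds / slice_seconds)
    last_slice = cpu_seconds - (wakeups - 1) * slice_seconds
    return (wakeups - 1) * wakeup_interval + last_slice


# Hypothetical figures: 5 s of processor time, handed out 1 s at a time.
slow = elapsed_seconds(cpu_seconds=5.0, slice_seconds=1.0)
# If the job may keep the processor while nothing else is waiting,
# it simply runs to completion in one go.
fast = elapsed_seconds(cpu_seconds=5.0, slice_seconds=5.0)
```

Under these assumptions the compile takes about four minutes of elapsed time in the first case and five seconds in the second, which matches the "seconds rather than minutes" improvement in spirit, though not necessarily in its actual figures.
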

Contact details

Readers wishing to contact the Editor may do so by email to

Members who move house or change email address should go to

Queries about all other CCS matters should be addressed to the Secretary, Rachel Burnett at rb@burnett.uk.net, or by post to 80 Broom Park, Teddington, TW11 9RR.

50 Years ago .... From the Pages of Computer WeeklyBrian AldousFerranti win Argus orders from W Germany: Two large export orders for the supply of Argus 500 computer control systems to Kalle AG, a major West German chemical manufacturer, have been received by the automation systems division of Ferranti Ltd. The first order covers equipment to enhance the direct digital control capability of an existing Argus 500 system as well as providing for the simultaneous running of off-line programs. The other order is for a much bigger dual processor Argus 500 direct digital control system. (CW 4/6/70 p2) ICL install Etom 2000 at Stevenage: Forming part of the on-line phase of ICL ’s project on automated drawing office procedures which is under development at Stevenage, an Etom 2000 CRT display has been installed, linked via a 7020 terminal to a 1904E. Graphic Displays Ltd of Luton, which is now part of Kode International, now has five orders for the low cost Etom 2000, which ranges in price from about £4,100 for a basic system to £8,500 for a system which includes features such as a light pen and vector and character generation. (CW 4/6/70 p3) CDC goes after Business Market: Ever since the introduction of the CDC 7600 computer it has been Control Data ’s avowed intention to extend its customer base from the scientific research establishments, educational institutions and public utilities which still account for the bulk of its installations, to the larger commercial and industrial concerns. Now this policy has been taken a step further with a series of announcements designed to demonstrate the flexibility of the computing power which Control Data can provide, and the ease with which users of 6000 series computers can switch to the larger 7600 machines. (CW 11/6/70 p1) A Major Role for Computers on Election Night: Assessing the impact of politics in computers has become in recent months, with the machinations of Sub-committee D, almost a full-time occupation. 
But with June 18th only a week away, it might be appropriate to take a look at the impact of computers in politics. Perhaps the area in which the public will be most conscious of computing in the general election will be on election night itself. As usual the BBC and ITN networks will be staging an all-night election circus, each with its own unique selling points to entice the viewers away from the other. Electronic aids, following recent precedent, will play a large part in this. Somewhat paradoxically, both networks will be using the same bureau, Baric Computing Services, as the basis of their computerised voting analysis and results forecasting operation. Beyond this, however, the two systems will not converge; Baric is supplying two teams to develop the different systems, and the BBC will use an ICL 1905F in London and an ICL 4/50 in Manchester, whereas ITN will be using Baric ’s KDF9 at Kidsgrove. (CW 11/6/70 p10) VDU System deals with orders from Booksellers: Orders sent to Oxford University Press from booksellers are now being processed on five new ICL visual display units which have been linked to an ICL 1901A at the OUP offices in Neasden. With the new system, the customer reference, the OUP reference and an individual order is typed on to the keyboard. Books are entered by the first four letters in the author ’s name and four letters of the title. The VDU displays either the only book on the file which corresponds to the key letters, or a list of all possible titles, so that the operator can select the correct one and key in the quantity ordered. (CW 11/6/70 p15) DEC extends range of PDP-11 Peripherals: The range of peripherals for the PDP-11 computer introduced earlier this year has been extended by Digital Equipment Co to include a magnetic tape unit, a disc unit and a lineprinter. The company has also introduced a new disc unit and a software monitor for its PDP-8 and PDP-12 computers. 
The tape unit for the PDP-11 consists of the TC11 control unit and the TU56 dual tape transport. The TU56 can store up to 262,000 16-bit words on four-inch reels, and employs a type of redundancy recording in which each bit of data is stored on two tracks. The TC11 controller permits direct memory parallel-word access, and provides for bidirectional search, reading and writing of data. (CW 25/6/70 p25) IBM introduces System 370 Models 155 and 165 first in powerful new range: With the announcement of a new range of computers to be known as the System 370, and the introduction of two central processors in that range, IBM has at last pricked the bubble of rumour and speculation which has for many months surrounded any mention of its forthcoming products. First deliveries of the two new computers, which are known as Models 155 and 165, are scheduled to take place in February and April respectively next year. System 370 computers will be manufactured in both the US and Europe. The 155 will be produced at Poughkeepsie, New York, and at Montpellier in France, while the 165 will be produced at Kingston, New York, and Havant in the UK. The 165 is, according to IBM, the most powerful IBM computer ever to be manufactured in Europe and Mr E.R.Nixon, managing director of IBM UK, said he anticipated it would “make a very positive contribution to our balance of payments”. The smaller of the two new computers, the 370/155, is up to four times as fast as the 360/50. The main store, which has a cycle time of two microseconds, ranges in capacity from 256K bytes to 2,048K bytes – substantially larger than the 512K bytes which is the maximum core size of the current 360/50. The 370/165, which operates at five times the speed of the 360/65, is also fitted with two microsecond core-store, in this case ranging in size from 5I2K bytes to 3,072K bytes. 
(CW 2/7/70 p1)

GPO studies plan for Integrated Network: A comprehensive and detailed proposal for a future integrated data communications system, incorporating both store and forward techniques and high-speed circuit switching, has been devised by Standard Telecommunications Laboratories and British Telecommunications Research in studies commissioned by the Post Office. The suggested system would be based on 20 to 30 special computerised data switching exchanges located in major cities. It would be digital and synchronous throughout, and would utilise the pulse coded modulation digital transmission technique envisaged for telephony transmission. Thus it would employ the existing network of lines and cables. The long-term intention of the Post Office, according to the study contractors’ proposals, should be full interworking within a digitised telecommunications network bearing both telephony and non-telephony data and other services. The proposed network could carry the traffic resulting from future expansion of the existing telex network, and any future new telex network. (CW 16/7/70 p1)

Improved PDP-8 model has Faster Memory: The PDP-8/E, a new low-cost computer which will, in due course, replace both the PDP-8/I and the PDP-8/L, has been announced by Digital Equipment Company Ltd. Main features of the new machine are its re-designed architecture and the use of the MSI (Medium Scale Integration) technology as well as the TTL integrated circuits which are already used in the PDP-8/I. Some of the thinking which went into the PDP-11 appears to have influenced the design of the PDP-8/E: in particular the provision of an internal bus system called Omnibus, which allows the processor, memory modules and peripherals to be plugged into virtually any available space in the machine, closely resembles the Unibus system incorporated in the PDP-11.
(CW 16/7/70 p20)

Post Office sets up Trial Switched Network: Two important initial steps have been taken by the Post Office in its programme to meet the rapidly growing demand for data transmission facilities. This month the new Post Office Datel 48K service, the fastest data transmission service in Europe, which provides two-way transmission at 48 Kbps over rented wideband private lines, has come into operation. And even more important for the future, a trial switched network operating at the same speed between London, Birmingham, and Manchester, has been set up. The Post Office says that the success of the Datel 48K service will depend largely on the availability of suitable terminal equipment. The purpose of the trial network, therefore, is to give manufacturers the incentive to develop 48 Kbps terminal equipment compatible with Post Office equipment, and it provides a manually switched two-way 48 Kbps public transmission system and an optional simultaneous speech circuit between the cities. (CW 23/7/70 p32)

£7.5m Contract for GEC firm: Five GEC-Elliott Process Automation March 2140 processors, worth about £1,500,000, will be delivered as part of the £7.5 million contract awarded to GEC Electrical Projects Ltd by the British Steel Corporation. The contract covers equipment for the BSC’s new three million-tons-a-year bloom and billet mill at Scunthorpe, which will be the largest in the UK and one of the largest in the world, forming a key part of BSC’s £130 million development at Scunthorpe. GEC Electrical Projects will be responsible for the supply, erection, and commissioning of the electrical drives and the computer control system for the mill, which is due to be fully operational by the end of 1972.
The five 2140s will be interlinked in a two-level hierarchy formation in which one co-ordinating processor will link the production through the entire bloom and billet mill complex, and in which individual processors will provide control of primary and secondary bloom mills, a ten-stand continuous billet mill, and two flying shears. (CW 30/7/70 p16)

Siemens system to control London traffic: An important Greater London Council contract for the automation of traffic control in central London has been awarded to the German company, Siemens, despite two British tenders from Plessey and GEC Elliott Traffic Automation. The order, valued at £759,000, is for two Siemens 306 processors of 32K and 48K 24-bit word capacity respectively. The configuration will also include two disc units, a lineprinter, punched card peripherals, and data transmission equipment which will be used to transmit signals to the 300 sets of traffic signals. The project will cover an area in the heart of London, between Victoria (in the south and west), Marylebone Road (north) and Tower Bridge (east). Data on the occupancy of roads within the network, traffic flow, and traffic speeds will be collected by an estimated 500 induction loop detectors, buried a few inches beneath the surface of the roads. The project – which is known as CITRAC, for Central Integrated Traffic Control – is due to go into operation in 1973, although much of the equipment will be installed next year. The GLC plans to build a control centre which will house not only the computers, but also the police supervisors who will be responsible for taking action in the event of abnormal conditions. The equipment will initially be installed at County Hall. (CW 13/8/70 p1) |

Forthcoming Events

Readers will, we feel sure, understand the difficulties in planning meetings for the coming season which was scheduled to commence in September. As things stand at the time of writing, there are no meetings planned in Manchester and, although there is a provisional programme of London meetings, there is considerable doubt that any face-to-face meetings can take place in the current climate. However, our energetic Meetings Secretary, Roger Johnson, is working towards being able to arrange events which can be attended online. As soon as the mist clears and concrete arrangements are made, we will publish details on our website at www.computerconservationsociety.org/lecture.htm. Meanwhile may we wish you all health, wealth and happiness, but most of all health?

Museums

SIM: Demonstrations of the replica Small-Scale Experimental Machine at the Science and Industry Museum in Manchester are run every Tuesday, Wednesday, Thursday and Sunday between 12:00 and 14:00. Admission is free. See www.scienceandindustrymuseum.org.uk for more details.

Bletchley Park: daily. Exhibition of wartime code-breaking equipment and procedures, plus tours of the wartime buildings. Go to www.bletchleypark.org.uk to check details of times, admission charges and special events.

The National Museum of Computing: Open Tuesday-Sunday 10.30-17.00. Situated on the Bletchley Park campus, TNMoC covers the development of computing from the “rebuilt” Turing Bombe and Colossus codebreaking machines via the Harwell Dekatron (the world’s oldest working computer) to the present day. From ICL mainframes to hand-held computers. Please note that TNMoC is independent of Bletchley Park Trust and there is a separate admission charge. Visitors do not need to visit Bletchley Park Trust to visit TNMoC. See www.tnmoc.org for more details.

Science Museum: There is an excellent display of computing and mathematics machines on the second floor.
The Information Age gallery explores “Six Networks which Changed the World” and includes a CDC 6600 computer and its Russian equivalent, the BESM-6, as well as Pilot ACE, arguably the world’s third oldest surviving computer. The Mathematics Gallery has the Elliott 401 and the Julius Totalisator, both of which were the subject of CCS projects in years past, and much else besides. Other galleries include displays of ICT card-sorters and Cray supercomputers. Admission is free. See www.sciencemuseum.org.uk for more details.

Other Museums: At www.computerconservationsociety.org/museums.htm can be found brief descriptions of various UK computing museums which may be of interest to members. |

North West Group contact details

|


Committee of the Society

|

Computer Conservation Society

Aims and Objectives

The Computer Conservation Society (CCS) is a co-operative venture between BCS, The Chartered Institute for IT; the Science Museum of London; and the Museum of Science and Industry (MSI) in Manchester. The CCS was constituted in September 1989 as a Specialist Group of the British Computer Society (BCS). It thus is covered by the Royal Charter and charitable status of BCS. The objects of the Computer Conservation Society (“Society”) are:

Membership is open to anyone interested in computer conservation and the history of computing. The CCS is funded and supported by a grant from BCS and donations. Some charges may be made for publications and attendance at seminars and conferences. There are a number of active Projects on specific computer restorations and early computer technologies and software. Younger people are especially encouraged to take part in order to achieve skills transfer. The CCS also enjoys a close relationship with the National Museum of Computing.

|